OpenSentinelMap: A Large-Scale Land Use Dataset using OpenStreetMap and Sentinel-2 Imagery

- Authors: Noah Johnson, Wayne Treible, Daniel Crispell

- Date: 25 April 2022

- Source: EarthVision 2022 (CVPR Workshop)

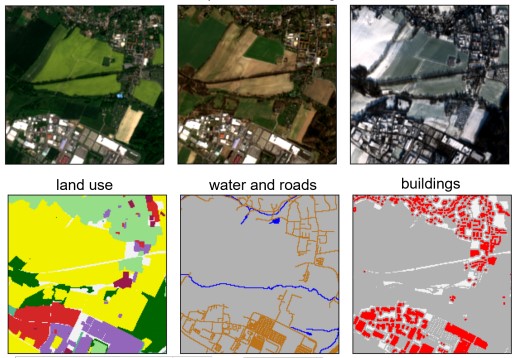

Remote sensing data is plentiful, but downloading, organizing, and transforming large amounts of data into a format readily usable by modern machine learning methods is a challenging and labor-intensive task. We present the OpenSentinelMap dataset, which consists of 137,045 unique 3.7 km² spatial cells, each containing multiple multispectral Sentinel-2 images captured over a 4-year period and a set of corresponding per-pixel semantic labels derived from OpenStreetMap data. The labels are not necessarily mutually exclusive, and contain information about roads, buildings, water, and 12 land-use categories. The spatial cells are selected randomly on a global scale over areas of human activity, without regard to OpenStreetMap data availability or quality, making the dataset ideal for supervised, semi-supervised, and unsupervised experimentation. To demonstrate the effectiveness of the dataset, we a) train an off-the-shelf convolutional neural network with minimal modification to predict land use and building and road locations from multispectral Sentinel-2 imagery and b) show that the learned embeddings are useful for downstream fine-grained classification tasks without any fine-tuning.
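Because the labels are not necessarily mutually exclusive, a single pixel can belong to several classes at once. The following is a minimal sketch of one common way to handle such labels: a multi-hot target per pixel scored with an independent sigmoid and binary cross-entropy per channel. It is not the authors' training code. The channel order, the placeholder names `landuse_0` to `landuse_11`, and the helper functions `multi_hot` and `pixel_bce` are assumptions made for illustration; only the count of 15 channels (roads, buildings, water, and 12 land-use categories) comes from the abstract.

```python
import math

# Assumed channel layout: the abstract names roads, buildings, water, and
# 12 land-use categories, but not their order or their individual names.
CLASSES = ["road", "building", "water"] + [f"landuse_{i}" for i in range(12)]


def multi_hot(active):
    """Encode the set of class names present at one pixel as a 0/1 vector."""
    return [1.0 if name in active else 0.0 for name in CLASSES]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def pixel_bce(logits, target):
    """Mean binary cross-entropy over channels for a single pixel.

    Each channel is an independent yes/no decision, so several classes can
    be positive at the same pixel (unlike softmax cross-entropy, which
    forces exactly one class).
    """
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for z, t in zip(logits, target):
        p = sigmoid(z)
        total -= t * math.log(p + eps) + (1.0 - t) * math.log(1.0 - p + eps)
    return total / len(target)


# Hypothetical pixel that is both a building and inside a land-use polygon.
target = multi_hot({"building", "landuse_3"})

# Logits that agree with the target versus logits that contradict it.
confident = [8.0 if t else -8.0 for t in target]
wrong = [-8.0 if t else 8.0 for t in target]

loss_confident = pixel_bce(confident, target)
loss_wrong = pixel_bce(wrong, target)
```

In this sketch, `loss_confident` comes out far smaller than `loss_wrong`, and the target vector has two active channels, which a single-label (softmax) formulation could not represent.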